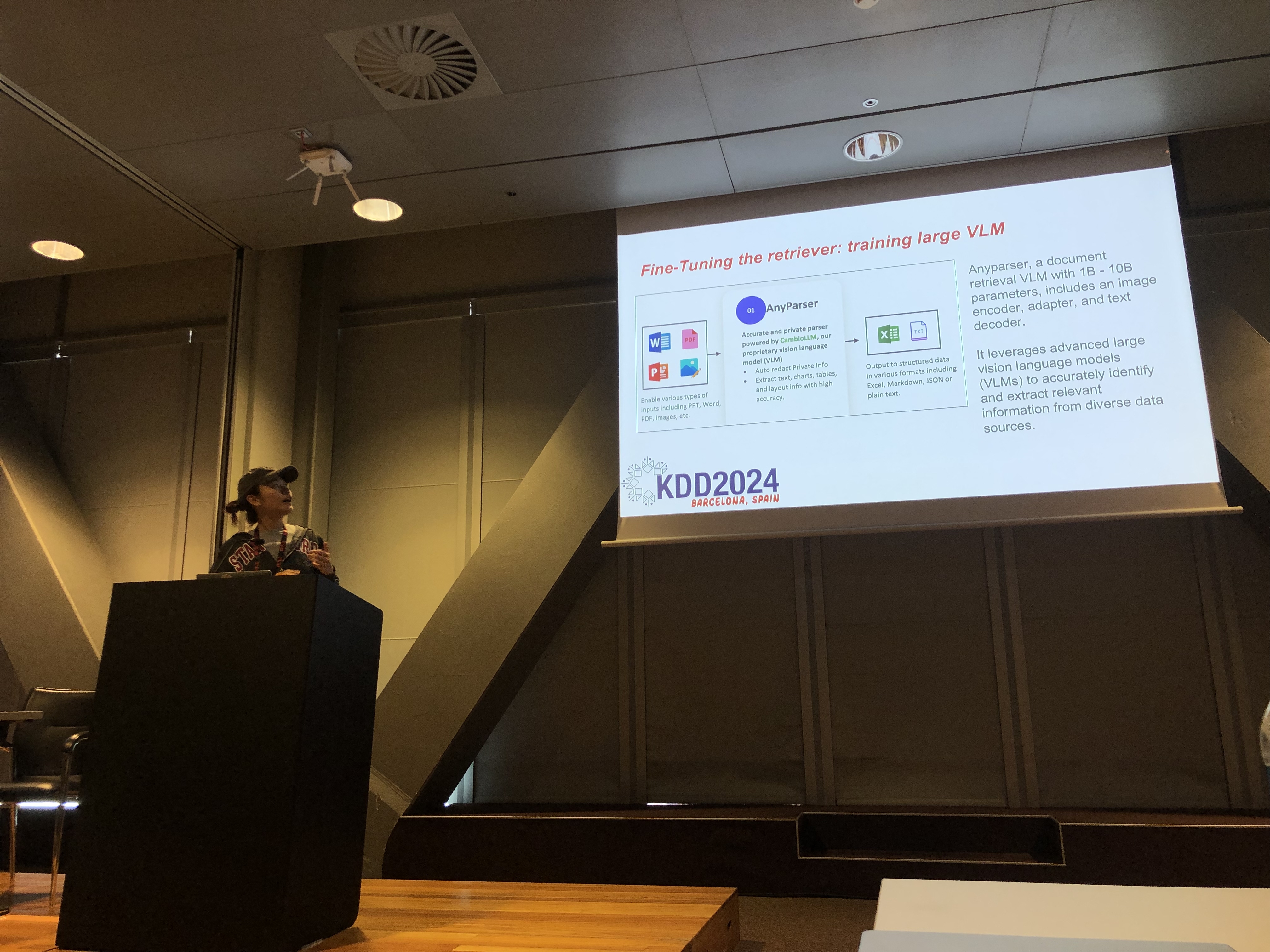

Rachel Hu presenting at the KDD 2024 conference

At the KDD 2024 conference, Rachel Hu, Co-founder and CEO of CambioML, presented a comprehensive tutorial on optimizing Large Language Models (LLMs) for domain-specific applications, alongside co-presenters José Cassio dos Santos Junior (Amazon), Richard Song (Epsilla), and Yunfei Bai (Amazon). The session provided in-depth insights into two critical techniques: Retrieval Augmented Generation (RAG) and LLM Fine-Tuning. These methods are essential for improving the performance of LLMs in specialized fields, allowing developers to create more effective and accurate models tailored to specific tasks.

Retrieval Augmented Generation (RAG) is a powerful approach that extends the capabilities of LLMs by integrating external knowledge bases. This technique enables LLMs to generate responses based on specific domain knowledge without requiring extensive retraining. RAG is particularly beneficial for organizations that need to leverage internal knowledge bases or other specialized resources, providing a way to enhance LLM performance in a cost-effective and time-efficient manner.
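To make the retrieval step concrete, here is a minimal sketch of the idea, not the tutorial's implementation. It assumes a tiny in-memory knowledge base and uses bag-of-words cosine similarity in place of a real embedding model and vector store. The names `KNOWLEDGE_BASE`, `retrieve`, and `build_prompt` are hypothetical, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a production system would
# typically use an embedding model and a vector store instead.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days of approval.",
    "Premium accounts include priority support and extended storage.",
    "Password resets require verification through the registered email.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two term-frequency Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, documents, top_k=1):
    """Assemble a prompt that grounds the model in retrieved context."""
    context = "\n".join(retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


prompt = build_prompt("How long do refunds take?", KNOWLEDGE_BASE)
```

The resulting `prompt` would then be sent to whichever LLM the application uses. The model's weights are untouched, which is why updating the knowledge base is enough to change its answers.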

LLM Fine-Tuning involves adjusting the model's weights using domain-specific data, allowing the model to systematically learn new, comprehensive knowledge that wasn't included during the pre-training phase. This approach is essential for tasks requiring a high degree of accuracy and is particularly effective in domains where general-purpose models fall short. Fine-Tuning can transform an LLM into a highly specialized tool, capable of performing complex, domain-specific tasks with precision.
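The core mechanic, continuing gradient-based training from existing weights on new domain data, can be illustrated with a deliberately tiny stand-in. The sketch below is an analogy only: it fine-tunes a two-parameter linear model rather than an LLM, and the "pre-trained" weights and domain data are made-up placeholders. Real LLM fine-tuning applies the same kind of update to billions of parameters using a deep learning framework.

```python
def mse(weights, data):
    """Mean squared error of the linear model y = w * x + b on data."""
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, data, learning_rate=0.05, epochs=500):
    """Continue training from the given weights with gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b)


# Placeholder "pre-trained" weights: the model starts out as y = x.
PRETRAINED = (1.0, 0.0)

# Placeholder domain data that follows y = 2x + 1, which the
# pre-trained model does not capture.
DOMAIN_DATA = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

tuned = fine_tune(PRETRAINED, DOMAIN_DATA)
loss_before = mse(PRETRAINED, DOMAIN_DATA)
loss_after = mse(tuned, DOMAIN_DATA)
```

Unlike RAG, the knowledge ends up encoded in the weights themselves, so the loss on domain data drops without any external context being supplied at inference time.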

The tutorial explored how combining RAG and Fine-Tuning can create a robust architecture for LLM applications. By integrating the two approaches, developers can build models that both retrieve the most relevant external information and learn from domain-specific data. The resulting hybrid models are versatile and accurate across a wide range of domain-specific tasks, from text generation to complex question answering.

A significant part of Rachel's tutorial was dedicated to hands-on labs, where participants explored advanced techniques to optimize RAG and Fine-Tuned LLM architectures. The labs covered a variety of topics.

The tutorial concluded with a set of best practices for implementing RAG and Fine-Tuning in real-world applications. It emphasized the trade-offs between RAG's flexibility and Fine-Tuning's precision and encouraged participants to experiment and benchmark continuously. Doing so helps ensure that performance and cost-effectiveness criteria are met as developers optimize their LLM architecture for domain-specific tasks.
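One way to put this benchmarking advice into practice is a small harness that scores each candidate configuration on a shared evaluation set and picks the cheapest one that clears an accuracy bar. The sketch below is an assumption about how such a harness might look, not something shown in the tutorial. The `Candidate` class, the stubbed answer functions, the evaluation set, and the cost figures are all hypothetical placeholders rather than measured results.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Candidate:
    name: str
    answer: Callable[[str], str]  # stands in for a full LLM pipeline
    cost_per_query: float         # placeholder cost, arbitrary units


def accuracy(candidate: Candidate, eval_set: List[Tuple[str, str]]) -> float:
    """Fraction of questions answered with an exact (case-insensitive) match."""
    correct = sum(
        1
        for question, expected in eval_set
        if candidate.answer(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(eval_set)


def select_candidate(
    candidates: List[Candidate],
    eval_set: List[Tuple[str, str]],
    min_accuracy: float,
) -> Optional[Candidate]:
    """Return the cheapest candidate meeting min_accuracy, or None."""
    qualifying = [c for c in candidates if accuracy(c, eval_set) >= min_accuracy]
    if not qualifying:
        return None
    return min(qualifying, key=lambda c: c.cost_per_query)


# Placeholder evaluation set and stubbed pipelines for illustration only.
EVAL_SET = [("q1", "a1"), ("q2", "a2"), ("q3", "a3")]

config_a = Candidate("config_a", {"q1": "a1", "q2": "a2", "q3": "wrong"}.get, 0.01)
config_b = Candidate("config_b", {"q1": "a1", "q2": "a2", "q3": "a3"}.get, 0.03)
config_c = Candidate("config_c", {"q1": "A1", "q2": "a2", "q3": "a3"}.get, 0.05)

best = select_candidate([config_a, config_b, config_c], EVAL_SET, min_accuracy=0.9)
```

In a real project the exact-match metric would likely be replaced by a task-appropriate one, and the harness would be rerun whenever the knowledge base, training data, or base model changes.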

For a more detailed overview of the tutorial's content and hands-on labs, please refer to this paper and this presentation.